A seismic shift is under way in the artificial intelligence industry, and it's happening largely outside public awareness. While much of the AI conversation over the past few years has focused on training larger and more capable models, the real action, and the real spending, are now shifting decisively toward inference infrastructure.

The Numbers Are Clear

According to IDC, investment in AI inference infrastructure is expected to surpass spending on training infrastructure by the end of 2025. More tellingly, 65% of organizations will be running more than 50 generative AI use cases in production by 2025, and each of those use cases can generate thousands or even millions of inference calls a day.

This isn't just a trend; it's a fundamental rebalancing of the AI ecosystem. Training happens once (or periodically). Inference happens continuously, at massive scale and in real time, for every user interaction.

What Is AI Inference?

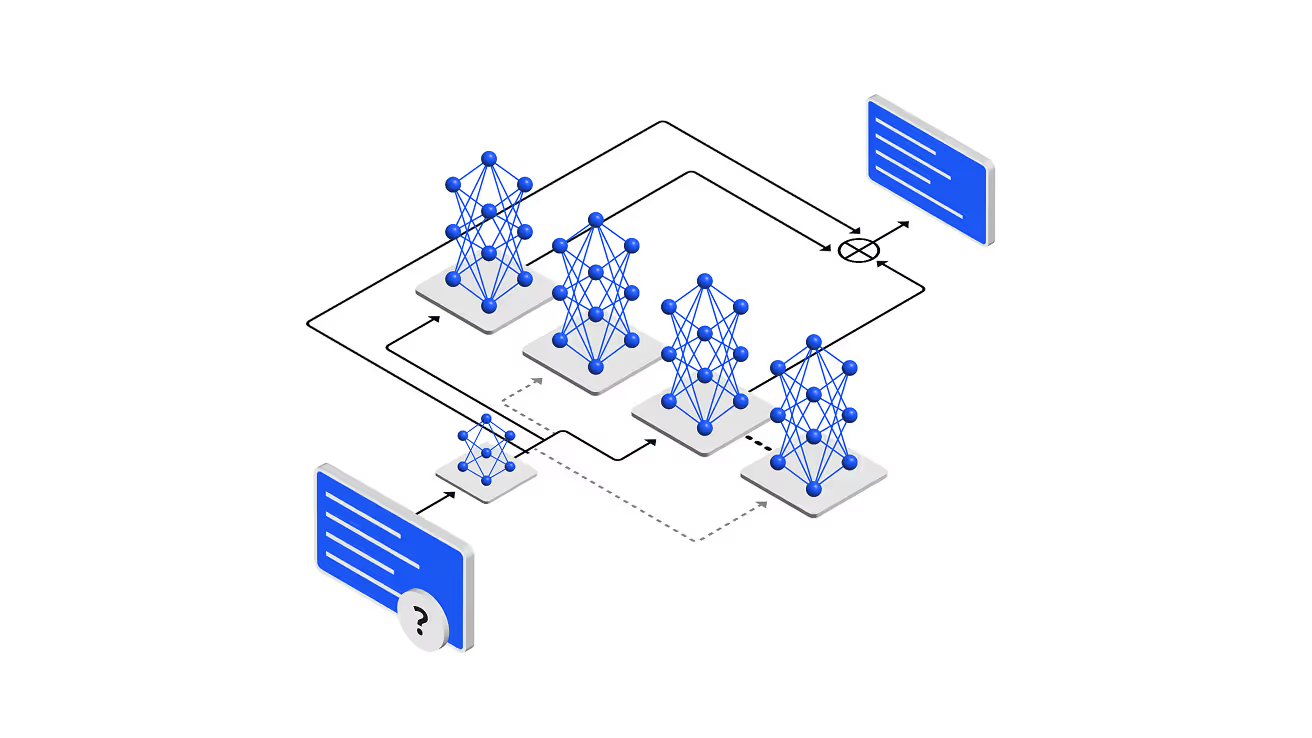

Training teaches an AI model by processing massive datasets, a computationally intensive process that can take weeks or months. Inference is what happens when that trained model is actually put to use. Every time you interact with ChatGPT, use an AI-powered recommendation system, or rely on predictive maintenance alerts, you're triggering inference operations.

Inference must be fast, efficient, and scalable. Users expect immediate responses. Systems need to handle millions of concurrent requests. And unlike training, which can often be batched and scheduled, inference happens on-demand, in response to real-world events and user needs.

The Edge Computing Connection

One of the most significant developments in AI inference is the shift toward edge computing. Rather than routing every inference request to centralized cloud data centers, which introduces latency and bandwidth constraints, organizations are increasingly deploying AI models closer to where data is generated and decisions need to be made.

Akamai's recent launch of its Inference Cloud platform exemplifies this trend. Leveraging NVIDIA Blackwell AI infrastructure, the platform provides low-latency, real-time edge AI processing on a global scale. This enables applications that were previously impractical, including 8K video workflows, live video intelligence, and AI-powered recommendation engines that must respond in milliseconds.
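A rough back-of-the-envelope sketch shows why distance matters. Even before any queuing or model execution, a request's round trip is bounded below by how fast light travels through optical fiber: roughly 200,000 km/s, or about 200 km per millisecond. The distances below are hypothetical examples, not measurements of Akamai's or any other platform.

```python
# Light in optical fiber travels at roughly two-thirds of its vacuum speed,
# about 200,000 km/s, which is roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores routing detours, queuing, and compute time, so real RTTs are higher.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances: a nearby edge site vs. a distant central cloud region.
for label, km in [("edge site, 50 km", 50), ("central region, 2,000 km", 2_000)]:
    print(f"{label}: at least {min_round_trip_ms(km):.1f} ms round trip")
```

With these assumed distances, the 2,000 km round trip costs at least 20 ms in propagation alone, versus 0.5 ms for the 50 km edge site. For an application with a millisecond-scale budget, that floor applies before the model has done any work at all.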

Why Inference Infrastructure Differs from Training

Training infrastructure is optimized for raw computational throughput. You want to process enormous datasets as quickly as possible, and you can tolerate higher latency and power consumption because training happens in controlled data center environments.

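Inference infrastructure has different priorities: low latency, so users get immediate responses; energy efficiency, especially when models run outside the data center; and the ability to scale to millions of concurrent requests.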

This has driven the development of hardware optimized for inference workloads, including NVIDIA's Tensor Cores, Google's TPUs, and various AI accelerators designed for edge deployment.

The Business Case

From a business perspective, the shift toward inference makes perfect sense. Training produces a capability; inference monetizes it. Every inference request represents value delivered to a customer: a recommendation made, a question answered, a prediction generated, a decision optimized.

As organizations deploy more AI applications in production, the cost of inference becomes a critical consideration. A model that costs $10 million to train but only pennies per inference can be far more valuable at scale than one that costs $1 million to train but dollars per inference.
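The trade-off behind the $10 million vs. $1 million comparison is simple arithmetic: lifetime cost is the one-time training cost plus the per-inference cost times the number of inferences served. The sketch below assumes "pennies" means $0.01 and "dollars" means $1.00 per inference. Both figures are illustrative assumptions, not quoted prices.

```python
def total_cost(training_usd: float, per_inference_usd: float, n_inferences: int) -> float:
    """Lifetime cost: one-time training spend plus per-request serving cost."""
    return training_usd + per_inference_usd * n_inferences


def break_even_inferences(train_a: float, per_a: float, train_b: float, per_b: float) -> float:
    """Inference count at which models A and B have equal lifetime cost.

    Solves train_a + per_a * n == train_b + per_b * n for n.
    """
    return (train_a - train_b) / (per_b - per_a)


# Assumed figures: "pennies" = $0.01 and "dollars" = $1.00 per inference.
model_a = (10_000_000, 0.01)  # expensive to train, cheap to run
model_b = (1_000_000, 1.00)   # cheap to train, expensive to run

n_star = break_even_inferences(*model_a, *model_b)
print(f"Break-even at about {n_star:,.0f} inferences")

for n in (1_000_000, 100_000_000):
    cost_a = total_cost(*model_a, n)
    cost_b = total_cost(*model_b, n)
    print(f"{n:>11,} inferences: A ${cost_a:,.0f} vs B ${cost_b:,.0f}")
```

Under these assumptions, the cheaper-to-train model comes out ahead only up to roughly 9.1 million inferences. At 100 million inferences, model A's lifetime cost is about $11 million, compared with about $101 million for model B.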

Real-World Applications

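The explosion in inference infrastructure is enabling entirely new classes of applications, from live video intelligence and 8K video workflows to AI-powered recommendation engines that must respond in milliseconds.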

Looking Ahead

As we move deeper into 2025 and beyond, expect to see continued innovation in inference infrastructure. This includes more specialized hardware, advanced optimization techniques like model quantization and pruning, and increasingly sophisticated approaches to distributed inference across cloud and edge environments.
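To make "model quantization" concrete, here is a minimal sketch of one common scheme, symmetric per-tensor int8 quantization, written in plain Python. It works under simplifying assumptions: a single scale for the whole tensor and made-up weight values. It is not how any particular framework implements quantization, since production toolchains typically add per-channel scales, calibration data, and optimized integer kernels.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q.

    The scale maps the largest-magnitude weight to 127, so every q is in [-127, 127].
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float weights."""
    return [scale * v for v in q]


# Hypothetical weights for illustration only.
weights = [0.42, -1.27, 0.003, 0.88, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts weight memory roughly fourfold. The cost is a rounding error of at most half a quantization step per weight, which is why quantized models are usually validated for accuracy before deployment.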

The AI training race may have captured the headlines, but it's the inference infrastructure race that will determine which organizations successfully deploy AI at scale. The future of AI isn't just about building better models; it's about running them efficiently, everywhere, all the time.

We're accepting 2 more partners for Q1 2026 deployment.

Partner benefits:

20% off standard pricing

Priority deployment scheduling

Direct access to our engineering team

Input on the feature roadmap

Partner requirements:

A commercial or industrial facility (25,000+ sq ft)

A location in the UAE, elsewhere in the Middle East, or Pakistan

Readiness to deploy within 60 days

Willingness to provide feedback